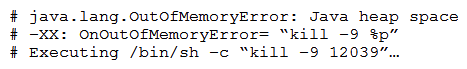

An operations team notices that a few AWS Glue jobs for a given ETL application are failing. The AWS Glue jobs read a large number of small JSON files from an Amazon S3 bucket and write the data to a different S3 bucket in Apache Parquet format with no major transformations. Upon initial investigation, a data engineer notices the following error message in the History tab on the AWS Glue console: "Command Failed with Exit Code 1." Upon further investigation, the data engineer notices that the driver memory profile of the failed jobs crosses the safe threshold of 50% usage quickly and reaches 90-95% soon after. The average memory usage across all executors continues to be less than 4%. The data engineer also notices the following error while examining the related Amazon CloudWatch Logs. What should the data engineer do to solve the failure in the MOST cost-effective way?

A) Change the worker type from Standard to G.2X.

B) Modify the AWS Glue ETL code to use the 'groupFiles': 'inPartition' feature.

C) Increase the fetch size setting by using an AWS Glue dynamic frame.

D) Modify maximum capacity to increase the total maximum data processing units (DPUs) used.
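For reference, option B names the file-grouping settings that AWS Glue accepts as S3 connection options when it reads many small files. The sketch below shows roughly how those settings might be passed in a job that copies JSON to Parquet. The helper names `grouped_s3_options` and `copy_json_to_parquet`, the bucket paths, and the 1 MB `groupSize` value are illustrative assumptions, not taken from the question. The `awsglue` library exists only inside the Glue runtime, so the Glue context is passed in as a parameter rather than imported here.

```python
def grouped_s3_options(source_path, group_size_bytes=1048576):
    """Build S3 connection options that ask Glue to group small input files.

    The 1 MB default group size is an illustrative assumption; tune it per workload.
    """
    return {
        "paths": [source_path],
        "recurse": True,
        # Coalesce many small files within each S3 partition into larger read groups.
        "groupFiles": "inPartition",
        # Target size of each group in bytes, passed as a string.
        "groupSize": str(group_size_bytes),
    }


def copy_json_to_parquet(glue_context, source_path, target_path):
    """Read grouped JSON from S3 and write it back out as Parquet.

    `glue_context` is expected to be an awsglue GlueContext created by the job script.
    """
    frame = glue_context.create_dynamic_frame.from_options(
        connection_type="s3",
        connection_options=grouped_s3_options(source_path),
        format="json",
    )
    glue_context.write_dynamic_frame.from_options(
        frame=frame,
        connection_type="s3",
        connection_options={"path": target_path},
        format="parquet",
    )


if __name__ == "__main__":
    # Hypothetical bucket path, shown only to illustrate the resulting options.
    print(grouped_s3_options("s3://example-source-bucket/input/"))
```

Inside a Glue job script, `copy_json_to_parquet` would be called with the job's own `GlueContext` and the real source and target paths. Grouping reduces the number of per-file tasks the driver has to track and does not require adding workers or DPUs.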
