Deck 4: Lossless Compression Algorithms

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Question

Unlock Deck

Sign up to unlock the cards in this deck!

Unlock Deck

Unlock Deck

1/11

Play

Full screen (f)

Deck 4: Lossless Compression Algorithms

1

Calculate the entropy of a "checkerboard" image in which half of the pixels are BLACK and half of them are WHITE.

1 bit/symbol.

2

Consider a text string containing a set of characters and their frequency counts as follows: A: (15), B: (7), C: (6), D: (6) and E: (5). Show that the Shannon-Fano algorithm produces a tree that encodes this string in a total of 89 bits, whereas the Huffman algorithm needs only 87 bits.

Not Answer

3

Suppose we have a source consisting of two symbols, and , with probabilities and .

(a) Using Arithmetic Coding, what are the resulting bitstrings, for input and ?

(b) A major advantage of adaptive algorithms is that no a priori knowledge of symbol probabilities is required.

(1) Propose an Adaptive Arithmetic Coding algorithm and briefly describe how it would work. For simplicity, let's assume both encoder and decoder know that exactly symbols will be sent each time.

(2) Assume the sequence of messages is initially , followed by the sequence is .

Show how your Adaptive Arithmetic Coding algorithm works, for this sequence, for encoding: (i) the first , (ii) the second , and (iii) the last .

(a) Using Arithmetic Coding, what are the resulting bitstrings, for input and ?

(b) A major advantage of adaptive algorithms is that no a priori knowledge of symbol probabilities is required.

(1) Propose an Adaptive Arithmetic Coding algorithm and briefly describe how it would work. For simplicity, let's assume both encoder and decoder know that exactly symbols will be sent each time.

(2) Assume the sequence of messages is initially , followed by the sequence is .

Show how your Adaptive Arithmetic Coding algorithm works, for this sequence, for encoding: (i) the first , (ii) the second , and (iii) the last .

Not Answer

4

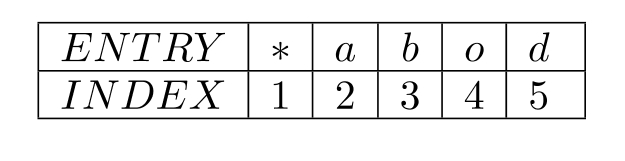

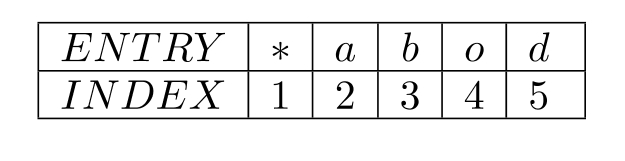

For the LZW algorithm, assume an alphabet a b o d of size 5 from which text characters are picked.

Suppose the following string of characters is to be transmitted (or stored):

The coder starts with an initial dictionary consisting of the above 5 characters and an index assignment, as in the following table:

Make a table showing the string being assembled, the current character, the output, the new symbol, and the index corresponding to the symbol, for the above input - only go as far as "d a b b a d a b b a * d".

Suppose the following string of characters is to be transmitted (or stored):

The coder starts with an initial dictionary consisting of the above 5 characters and an index assignment, as in the following table:

Make a table showing the string being assembled, the current character, the output, the new symbol, and the index corresponding to the symbol, for the above input - only go as far as "d a b b a d a b b a * d".

Unlock Deck

Unlock for access to all 11 flashcards in this deck.

Unlock Deck

k this deck

5

Consider an alphabet with three symbols , with probability . and .

State how you would go about plotting the entropy as a function of and . Pseudocode is perhaps the best way to state your answer.

State how you would go about plotting the entropy as a function of and . Pseudocode is perhaps the best way to state your answer.

Unlock Deck

Unlock for access to all 11 flashcards in this deck.

Unlock Deck

k this deck

6

Is the following code uniquely decodable?

Unlock Deck

Unlock for access to all 11 flashcards in this deck.

Unlock Deck

k this deck

7

Consider the question of whether it is true that the size of the Huffman code of a symbol with probability is always less than or equal to .

As a counter example, let the source alphabet be . Given and , construct a Huffman tree. Will the tree be different for different values for and ? What conclusion can you draw?

As a counter example, let the source alphabet be . Given and , construct a Huffman tree. Will the tree be different for different values for and ? What conclusion can you draw?

Unlock Deck

Unlock for access to all 11 flashcards in this deck.

Unlock Deck

k this deck

8

Suppose we wish to transmit the 10-character string "MULTIMEDIA". The characters in the string are chosen from a finite alphabet of 8 characters.

(a) What are the probabilities of each character in the source string?

(b) Compute the entropy of the source string.

(c) If the source string is encoded using Huffman coding, draw the encoding tree and compute the average number of bits needed.

(a) What are the probabilities of each character in the source string?

(b) Compute the entropy of the source string.

(c) If the source string is encoded using Huffman coding, draw the encoding tree and compute the average number of bits needed.

Unlock Deck

Unlock for access to all 11 flashcards in this deck.

Unlock Deck

k this deck

9

If the source string MULTIMEDIA is now encoded using Arithmetic coding, what is the codeword in fractional decimal representation. How many bits are needed for coding in binary format? How does this compare to the entropy?

Unlock Deck

Unlock for access to all 11 flashcards in this deck.

Unlock Deck

k this deck

10

Using the Lempel-Ziv-Welch (LZW) algorithm, encode the following source symbol sequence: MISSISSIPPI\#

Show initial codes as ASCII: e.g., "M". Start new codes with the code value 256.

(b) Assuming the source characters (ASCII) and the dictionary entry codes are combined and represented by a 9-bit code, calculate the total number of bits needed for encoding the source string up to the \# character, i.e. just MISSISSIPPI. What is the compression ratio, compared to simply using 8-bit ASCII codes for the input characters?

Show initial codes as ASCII: e.g., "M". Start new codes with the code value 256.

(b) Assuming the source characters (ASCII) and the dictionary entry codes are combined and represented by a 9-bit code, calculate the total number of bits needed for encoding the source string up to the \# character, i.e. just MISSISSIPPI. What is the compression ratio, compared to simply using 8-bit ASCII codes for the input characters?

Unlock Deck

Unlock for access to all 11 flashcards in this deck.

Unlock Deck

k this deck

11

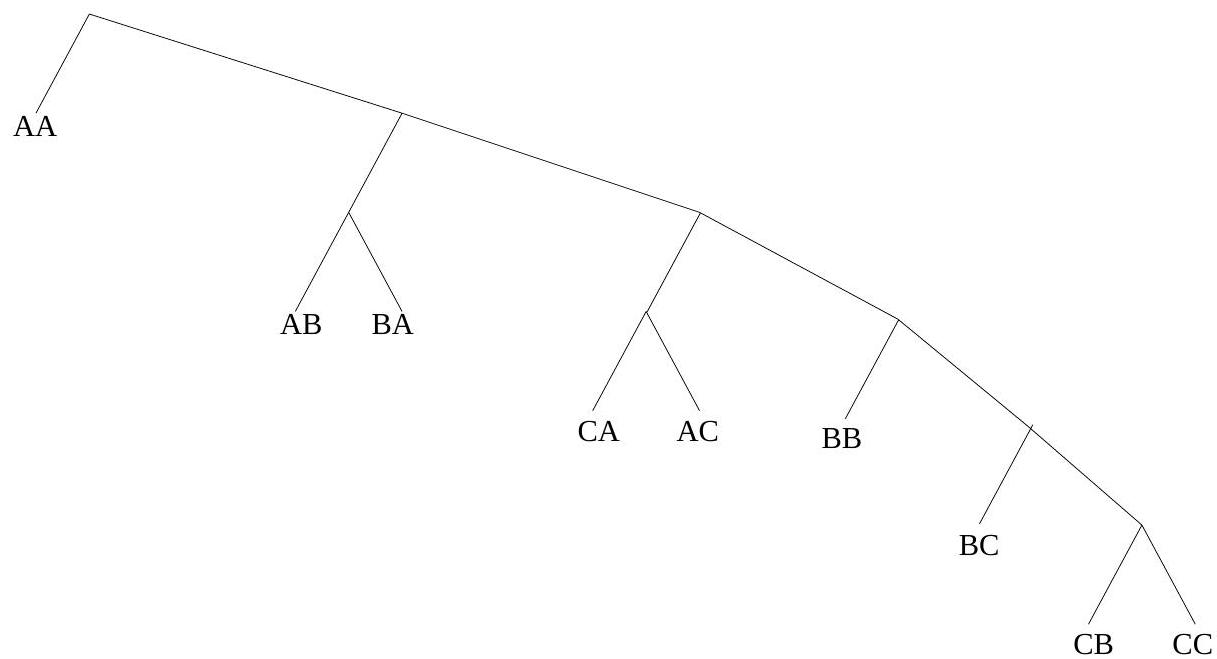

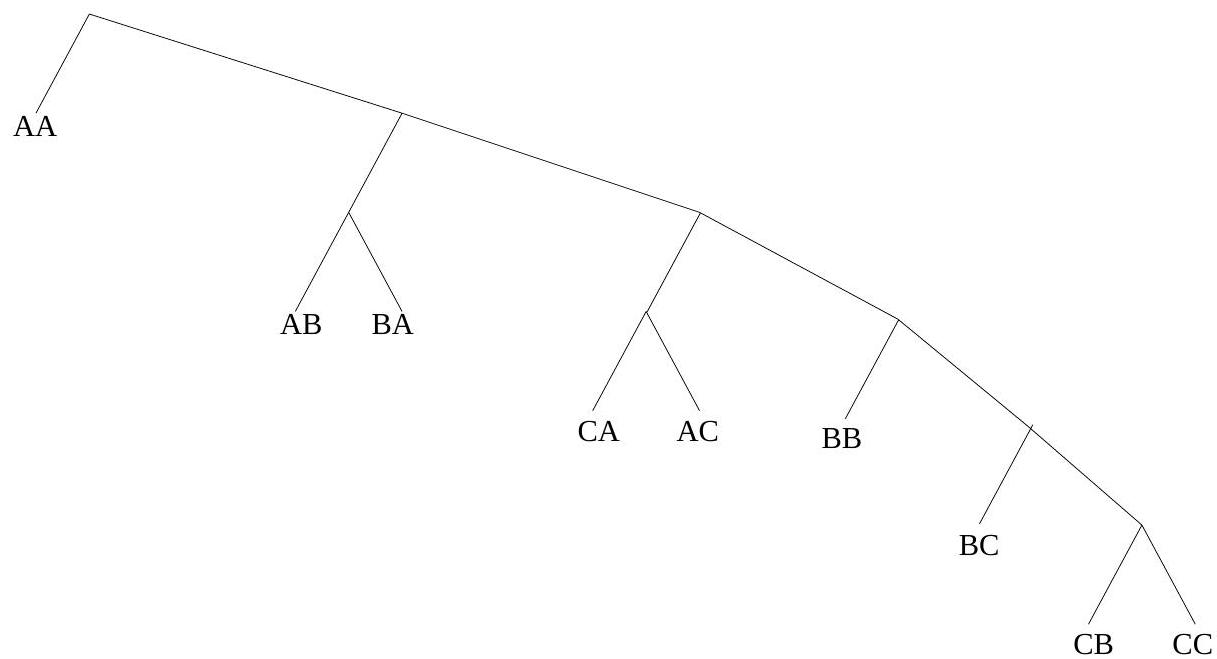

Construct a binary Huffman code for a source with three symbols and , having probabilities , and 0.1 , respectively. What is its average codeword length, in bits per symbol? What is the entropy of this source?

(b) Let's now extend this code by grouping symbols into 2-character groups - a type of VQ. Compare the performance now, in bits per original source symbol, with the best possible.

Fig. 7.1:

Fig. 7.1:

(b) Let's now extend this code by grouping symbols into 2-character groups - a type of VQ. Compare the performance now, in bits per original source symbol, with the best possible.

Fig. 7.1:

Fig. 7.1:

Unlock Deck

Unlock for access to all 11 flashcards in this deck.

Unlock Deck

k this deck